Python generators are a powerful feature for lazy iteration over a sequence of values. They produce items one by one, only when needed, which makes them a strong choice for working with large data sets or data streams where loading everything into memory at once would be inefficient or impractical.

How to Define and Use Generators

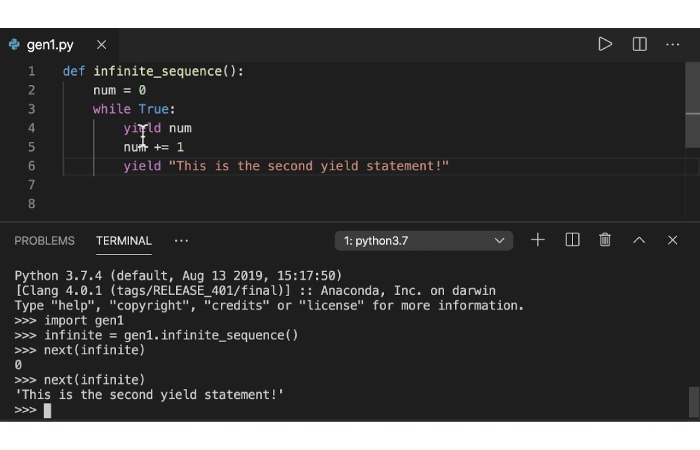

To define a generator, you use the def keyword just as you would for a normal function. However, instead of returning a value with return, you use yield.

The yield keyword produces a value and pauses the execution of the generator function. When the function resumes, it continues immediately after the yield statement, with all of its local variables intact.

Example: Simple Generator

```python
def simple_generator(n):
    i = 0
    while i < n:
        yield i  # hand back i and pause here until the next value is requested
        i += 1

# Using the generator
gen = simple_generator(5)
for number in gen:
    print(number)
```

Output:

```
0
1
2
3
4
```

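When simple_generator() is called, none of its body runs yet. Instead, the call returns a generator object, which implements the iterator protocol: it has an __iter__() method that returns the object itself and a __next__() method. Each call to __next__() runs the function body until the next yield, returns the yielded value, and pauses again. When the body finishes, __next__() raises StopIteration.

A for loop uses this protocol implicitly. It calls next() on the generator repeatedly and stops cleanly when StopIteration is raised.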
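You can see the pause-and-resume behavior directly in the following sketch, which drives a generator by hand with next() instead of a for loop. The traced_generator() function and its log list are hypothetical. They exist only to record which parts of the body have run at each point.

```python
def traced_generator(log):
    """Record each step so the pause/resume points are visible (illustrative only)."""
    log.append("body started")
    yield "first"
    log.append("resumed after first yield")
    yield "second"
    log.append("body finished")

log = []
gen = traced_generator(log)
print(log)                  # [] : calling the function ran none of its body

first = next(gen)           # runs the body up to the first yield, then pauses
print(first, log)           # first ['body started']

second = next(gen)          # resumes right after the first yield
print(second, log)          # second ['body started', 'resumed after first yield']

try:
    next(gen)               # resumes after the second yield; the body then ends
except StopIteration:
    print("StopIteration:", log[-1])   # StopIteration: body finished

# A generator is its own iterator, which is what a for loop relies on.
print(iter(gen) is gen)     # True
```

A for loop performs exactly these next() calls for you and treats StopIteration as the signal to stop.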

Benefits of Using Generators

Python generators offer several advantages that improve both efficiency and readability. Because they produce elements on the fly instead of building a complete collection up front, they reduce memory usage and can deliver results sooner than approaches that materialize every value first.

Let’s explore some of these benefits in detail and see how generators can make Python code leaner and easier to follow.

Memory optimization

Lists load all of their elements into memory at once. Generators, by contrast, produce items one by one, only when they are requested, so they use far less memory.

The example below compares the two approaches for a large sequence of numbers:

```python
# Using a list
numbers = [i for i in range(1000000)]

# Using a generator
def number_generator():
    for i in range(1000000):
        yield i

gen_numbers = number_generator()
```

With a list, all 1,000,000 numbers are stored in memory at once. With a generator, the numbers are produced one at a time, so only the current value needs to be kept in memory.
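One rough way to see the difference is to compare object sizes with the standard library's sys.getsizeof(). The sketch below uses a generator expression, the parenthesized counterpart of a list comprehension, as a shorthand for number_generator(). The exact byte counts vary by Python version and platform, so treat the numbers as illustrative.

```python
import sys

# List comprehension: all one million integers are built up front.
numbers = [i for i in range(1_000_000)]

# Generator expression: the lazy counterpart, equivalent to number_generator().
gen_numbers = (i for i in range(1_000_000))

# getsizeof() measures only the container object, not the int objects it
# refers to, so the list's real footprint is even larger than reported here.
print(sys.getsizeof(numbers))      # several megabytes (exact value varies)
print(sys.getsizeof(gen_numbers))  # typically a few hundred bytes or less

# Both can be consumed the same way; the generator never builds a list.
print(sum(gen_numbers) == sum(numbers))  # True
```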

Enhanced Performance

Because generators produce items on the fly, they can also improve performance, most notably the time until the first result is available. They start delivering values immediately instead of waiting for an entire data set to be built.

In this example, suppose we need to square each number in a sequence:

```python
# Using a list
numbers = [i for i in range(1000000)]
square_numbers = [x**2 for x in numbers]

# Using a generator
def number_generator():
    for i in range(1000000):
        yield i

def squared_gen(gen):
    for num in gen:
        yield num**2

gen_numbers = number_generator()
squared_gen_numbers = squared_gen(gen_numbers)
```

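With lists, every number is generated and stored before the first square is computed, and both the original list and the list of squares occupy memory in full. With the generator pipeline, each number is squared as soon as it is generated, and no work happens at all until a consumer requests values. This does not necessarily make the total computation faster, but it lowers memory usage and lets processing begin immediately.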
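The sketch below makes the laziness visible. It adds a hypothetical `produced` list to number_generator() that records each number actually generated, then uses the standard itertools.islice() to request only the first five squares from a pipeline over a million numbers.

```python
from itertools import islice

def number_generator(limit, produced):
    """Yield 0..limit-1, recording each number actually produced (instrumentation only)."""
    for i in range(limit):
        produced.append(i)
        yield i

def squared_gen(gen):
    for num in gen:
        yield num ** 2

produced = []
pipeline = squared_gen(number_generator(1_000_000, produced))

# Building the pipeline does no work yet.
print(len(produced))  # 0

# Ask for just the first five squares.
first_five = list(islice(pipeline, 5))
print(first_five)     # [0, 1, 4, 9, 16]
print(len(produced))  # 5
```

Only five source numbers were ever created, even though the pipeline was defined over a million of them.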

Simplicity and Readability

Generators help write clean and readable code. They allow us to describe an iterative algorithm without the need for repetitive code to manage the state of the iteration. Consider a scenario where we need to read lines from a large file:

```python
# Using a list
def read_large_file(file_name):
    with open(file_name, 'r') as file:
        lines = file.readlines()  # reads every line into memory at once
    return lines

lines = read_large_file('large_file.txt')

# Using a generator
def read_large_file(file_name):
    with open(file_name, 'r') as file:
        for line in file:  # the file object itself yields one line at a time
            yield line

lines_gen = read_large_file('large_file.txt')
```

The list version reads every line into memory at once. The generator version reads and yields one line at a time, which keeps memory usage low no matter how many lines the file has, while keeping the code short and readable.
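Because the generator version returns an iterator, it can feed directly into other lazy code. The sketch below counts lines containing a keyword without ever holding the whole file in memory. The count_matching() helper and the sample log contents are hypothetical. The temporary file just stands in for large_file.txt.

```python
import os
import tempfile

def read_large_file(file_name):
    with open(file_name, 'r') as file:
        for line in file:
            yield line

def count_matching(lines, keyword):
    """Hypothetical helper: count lines containing keyword, one line at a time."""
    return sum(1 for line in lines if keyword in line)

# Write a small sample file to stand in for a large log (made-up contents).
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
    tmp.write("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")
    path = tmp.name

try:
    errors = count_matching(read_large_file(path), "ERROR")
    print(errors)  # 2
finally:
    os.remove(path)  # clean up the sample file
```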

Practical use cases

This section explores some practical use cases where Python generators excel, showing how they simplify complex tasks while keeping performance and memory usage in check.

Stream processing

Generators are great for handling continuous streams of data, such as real-time sensor data, log streams, or live streams from APIs. They ensure efficient processing of data as it becomes available, without the need to store large amounts of data in memory.

```python
import time

def data_stream():
    """Simulate a data stream."""
    for i in range(10):
        time.sleep(1)  # Simulate data arriving every second
        yield i

def stream_processor(source):
    """Process data from the stream."""
    for data in source:
        processed_data = data * 2  # Example processing step
        yield processed_data

# Usage
stream = data_stream()
processed_stream = stream_processor(stream)
for data in processed_stream:
    print(data)
```

In this example, the data_stream() function yields a value at regular intervals, simulating a continuous data stream. stream_processor() processes each item as it arrives, demonstrating how generators can handle streaming data without loading all of it into memory at once.
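Stream processors often need a little state across items, such as a sliding window. The following sketch keeps that state inside the generator using the standard collections.deque with a maxlen. The moving_average() name, the window size, and the sample readings are hypothetical, and the readings list stands in for a live source.

```python
from collections import deque

def moving_average(stream, window):
    """Yield the average of the last `window` values once enough have arrived."""
    recent = deque(maxlen=window)  # the oldest value drops off automatically
    for value in stream:
        recent.append(value)
        if len(recent) == window:
            yield sum(recent) / window

readings = [1, 2, 3, 4, 5]  # hypothetical sensor readings
averages = list(moving_average(iter(readings), window=3))
print(averages)  # [2.0, 3.0, 4.0]
```

The same function could sit downstream of the article's pipeline, for example moving_average(stream_processor(data_stream()), 3), and would emit each average as soon as its window fills.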

Iterative Algorithms

Generators provide a simple way to define and run iterative algorithms that involve repetitive calculations and simulations. They allow us to maintain iteration state without manually managing loop variables, which can improve code clarity and maintainability.

```python
def fibonacci_generator():
    a, b = 0, 1
    while True:  # infinite: the consumer decides when to stop
        yield a
        a, b = b, a + b

# Usage
fib_gen = fibonacci_generator()
for i in range(10):
    print(next(fib_gen))
```

In the example above, fibonacci_generator() defines a generator that produces Fibonacci numbers indefinitely. It yields the Fibonacci numbers one at a time, starting with 0 and 1.

Here, the for loop runs 10 times and calls next() on each pass to print the first 10 Fibonacci numbers. This shows how generators can produce and manage sequences, even infinite ones, without preloading them into memory.
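Because fibonacci_generator() never ends on its own, calling list() on it directly would never return. The standard itertools module offers safer ways to cut an infinite generator down. islice() takes a fixed number of items, and takewhile() stops at the first item that fails a condition.

```python
from itertools import islice, takewhile

def fibonacci_generator():
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b

# Take a fixed number of items from the infinite sequence.
first_ten = list(islice(fibonacci_generator(), 10))
print(first_ten)  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]

# Take items only while a condition holds.
below_100 = list(takewhile(lambda x: x < 100, fibonacci_generator()))
print(below_100)  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89]
```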

Real-time simulator

In this example, we will simulate real-time updates to the price of a stock. The generator will produce a new stock price at each step based on the previous price and a random fluctuation.

```python
import random
import time

def stock_price_generator(initial_price, volatility, steps):
    """Generate stock prices starting from initial_price with the given volatility."""
    price = initial_price
    for _ in range(steps):
        # Simulate price change
        change_percent = random.uniform(-volatility, volatility)
        price += price * change_percent
        yield price
        time.sleep(1)  # Simulate real-time delay before the next update

# Create the stock price generator
initial_price = 100.0  # Starting stock price
volatility = 0.02      # Maximum change per step as a fraction (0.02 = 2%)
steps = 10             # Number of steps (updates) to simulate

stock_prices = stock_price_generator(initial_price, volatility, steps)

# Simulate receiving and processing real-time stock prices
for price in stock_prices:
    print(f"New stock price: {price:.2f}")
```

This example generates each new price on the fly from the previous one and does not store the generated prices in memory, which makes it efficient for long-running simulations.

The generator produces a new stock price at each step with minimal calculation. time.sleep(1) simulates a real-time delay between updates. Note that time.sleep() blocks the current thread, so the program simply waits between prices. Doing other work in the meantime would require threads or an asynchronous approach.
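For testing or reproducible experiments, it can help to pass in a seeded random number generator and drop the sleep. The sketch below is a variant of stock_price_generator() under that assumption. The optional rng parameter is an addition for illustration and is not part of the original example. It uses the standard random.Random class.

```python
import random

def stock_price_generator(initial_price, volatility, steps, rng=None):
    """Variant of the example above with an injectable RNG (hypothetical) and no sleep."""
    if rng is None:
        rng = random.Random()
    price = initial_price
    for _ in range(steps):
        change_percent = rng.uniform(-volatility, volatility)
        price += price * change_percent
        yield price

# Two generators seeded identically produce identical price paths.
run_a = list(stock_price_generator(100.0, 0.02, 10, rng=random.Random(42)))
run_b = list(stock_price_generator(100.0, 0.02, 10, rng=random.Random(42)))
print(run_a == run_b)  # True

for price in run_a:
    print(f"New stock price: {price:.2f}")
```

Injecting the RNG keeps the generator's lazy, step-by-step structure unchanged while making its output repeatable.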

Summary

In summary, Python generators provide efficient memory management and improved performance, simplifying code while addressing various tasks such as stream processing, iterative algorithms, and real-time simulation.

Their ability to optimize resources makes them a valuable tool for modern Python developers looking for elegant and scalable solutions.

I hope this exploration of Python generators gives you the information you need to use them to their full potential. If you have any questions or would like to discuss further, please feel free to contact me on LinkedIn. You can also check out my YouTube channel, where I share videos about coding techniques and projects I’m working on.